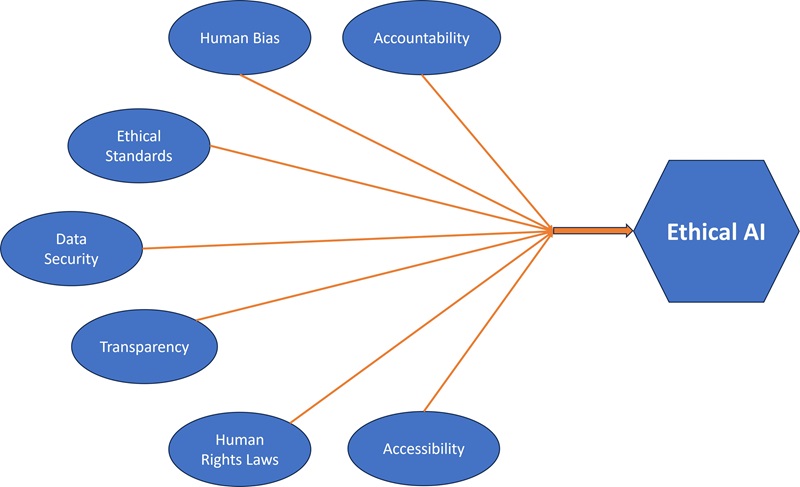

Ethical and privacy implications are becoming increasingly important amid the current rapid progress in Artificial Intelligence (AI), particularly in generative AI systems. This progress has fuelled the growth of user-centric AI models, for example those used for content creation and personalisation, making it critical to strike a balance that encourages innovation while safeguarding users' rights.

Ethical responsibility for generative AI models does not rest with technologists and developers alone. Building a society able to cope with such advanced technologies, including the widespread use of generative AI, will require open dialogue and collaboration between policymakers, ethicists, industry leaders, and the public. Establishing robust ethical guidelines, creating efficient governance structures, and educating people about the capabilities, limitations and responsible use of generative AI are also essential to its development.

This blog explores the ethical considerations and privacy concerns related to user-centric generative AI systems, together with strategies for implementing such systems responsibly.

Understanding user-centric generative AI:

User-centric generative AI systems, including language models like GPT-3, have demonstrated remarkable capabilities in generating human-like content from input data. These systems aim to provide personalised experiences, whether in content creation, recommendation engines, or conversational interfaces. However, as these technologies, and generative AI models in particular, become more widespread, it is essential to scrutinise their ethical and privacy impact. Generative AI can also create new business risks, such as misinformation, plagiarism, copyright infringement and harmful content.

Ethical Implications of Generative AI:

- Bias and fairness

- Transparency and clarity

- User consent and control

User-centric AI systems may inadvertently perpetuate biases present in training data, potentially leading to unfair or discriminatory outcomes. Addressing bias requires ongoing monitoring, transparent model development, and careful curation of training datasets.
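One concrete form of "ongoing monitoring" is disaggregated evaluation: measuring a model's accuracy separately for each demographic group and flagging large gaps. The sketch below is illustrative only; the evaluation data, the group labels and the 0.1 tolerance are all hypothetical, and a real audit would choose its metrics and thresholds for the specific use case.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Largest accuracy difference between the best- and worst-served groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical evaluation data: true labels, model predictions, group attribute.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)

THRESHOLD = 0.1  # hypothetical tolerance; set per use case
if gap > THRESHOLD:
    print(f"Bias alert: accuracy gap {gap:.2f} across groups {per_group}")
```

Here group A is served perfectly while group B is mostly misclassified, so the check raises an alert. Accuracy is only one lens; other fairness metrics (for example, comparing positive-outcome rates across groups) can disagree with it, so audits usually track several.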

The "black-box" nature of some AI models poses challenges in understanding how decisions are made. Prioritising transparency and clarity is essential to build trust and enable users to comprehend the rationale behind AI-generated content.

It is important to respect users' autonomy. AI systems should prioritise obtaining explicit consent for the use of data and give users a meaningful degree of control over the personalisation and content-creation processes.
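Consent and control can be enforced in code by checking a per-purpose consent record before any personal data reaches the model. The following is a minimal sketch under assumed names: `ConsentRecord`, the `"personalisation"` purpose string and `build_prompt` are hypothetical, not part of any particular framework.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Purposes a user has explicitly opted into (hypothetical schema)."""
    user_id: str
    purposes: set = field(default_factory=set)

    def grant(self, purpose: str) -> None:
        self.purposes.add(purpose)

    def revoke(self, purpose: str) -> None:
        self.purposes.discard(purpose)

    def allows(self, purpose: str) -> bool:
        return purpose in self.purposes


def build_prompt(request: str, history: list, consent: ConsentRecord) -> str:
    """Include the user's history for personalisation only if they opted in."""
    if consent.allows("personalisation") and history:
        context = "; ".join(history)
        return f"User preferences: {context}\nRequest: {request}"
    # No consent (or no history): fall back to a non-personalised prompt.
    return f"Request: {request}"
```

Because consent defaults to an empty set, personalisation is opt-in, and calling `revoke` immediately stops history from being used in later prompts.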

Privacy concerns:

- Data security

- Data minimisation

- Anonymity and de-identification

Protecting user data from unauthorised access is an essential aspect of ethical AI deployment. Sensitive information should be safeguarded with strong security measures such as encryption and access control.
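Access control is often implemented as a role-based policy with deny-by-default semantics. The sketch below covers only that half of the paragraph; encryption would normally rely on a vetted cryptography library and is not shown. The roles, action names and `POLICY` table are hypothetical examples.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"
    ANALYST = "analyst"
    SUPPORT = "support"


# Hypothetical policy: which roles may perform which action on user data.
POLICY = {
    "read_raw_user_data": {Role.ADMIN},
    "read_aggregated_stats": {Role.ADMIN, Role.ANALYST},
    "read_support_tickets": {Role.ADMIN, Role.SUPPORT},
}


class AccessDenied(Exception):
    pass


def authorise(role: Role, action: str) -> None:
    """Raise AccessDenied unless `role` is explicitly granted `action`."""
    allowed = POLICY.get(action, set())  # unknown actions: nobody is allowed
    if role not in allowed:
        raise AccessDenied(f"{role.value} may not {action}")
```

An analyst can read aggregated statistics but not raw user data, and any action missing from the policy is refused, so forgetting to register a new action fails safe rather than open.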

Collecting and storing only the user data necessary for AI training minimises privacy risks. Adopting principles of data minimisation ensures that only essential information is used, reducing the potential impact of data breaches.
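A simple way to apply data minimisation is an allowlist of fields applied before anything is stored. In this minimal sketch the field names and the sample record are hypothetical.

```python
# Hypothetical allowlist: the only fields the training pipeline actually needs.
TRAINING_FIELDS = {"text", "language", "timestamp"}


def minimise(record: dict, allowed=TRAINING_FIELDS) -> dict:
    """Keep only allow-listed fields; everything else is dropped before storage."""
    return {k: v for k, v in record.items() if k in allowed}


raw = {
    "text": "How do I reset my password?",
    "language": "en",
    "timestamp": "2024-01-15T10:00:00Z",
    "email": "jane@example.com",
    "ip_address": "203.0.113.7",
}
print(minimise(raw))
```

An allowlist is used rather than a blocklist so that any new field added upstream is excluded by default until someone decides it is genuinely needed.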

Stripping personally identifiable information from user data before it reaches AI models adds an extra layer of privacy protection. Implementing anonymisation and de-identification techniques helps maintain user privacy while still benefiting from AI-driven personalisation.
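A common de-identification step is to drop direct identifiers and replace the user ID with a keyed hash (HMAC), so records can still be linked for personalisation without exposing the original ID. The sketch below assumes a hypothetical `PSEUDONYM_KEY` and field list. Note that this is pseudonymisation rather than full anonymisation: anyone holding the key can re-link records, and remaining quasi-identifiers may still allow re-identification.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice load it from a secrets manager and
# store it separately from the de-identified data.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Hypothetical list of fields that directly identify a person.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymise_id(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a user ID with an HMAC-SHA256 digest (stable for the same key)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict) -> dict:
    """Drop direct identifiers and pseudonymise the user ID; input is not mutated."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "user_id" in cleaned:
        cleaned["user_id"] = pseudonymise_id(cleaned["user_id"])
    return cleaned
```

A keyed hash is preferred over a plain SHA-256 of the ID because short or predictable IDs can be recovered from an unkeyed hash by brute force.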

Strategies for responsible implementation:

- Ethics by design

- Regular audits and assessments

- Gen AI implementation

‘Ethics by design’ is a systematic and comprehensive approach that builds ethical considerations into the design and development of new technology systems and devices. Although it can be applied to any technology, it has historically focused on AI systems. The ethical principles it most commonly emphasises for technology suppliers are transparency, accountability, data protection and robustness. To ensure that generative AI remains safe for all users, it is essential to keep up to date with developments in these areas and with the wider debate on AI ethics.

Conduct regular ethical audits and impact assessments to identify and correct potential biases, ensuring that the AI system complies with ethical standards and privacy regulations. AI systems are only as good as the data we provide them with: if the data is skewed, the AI will be too. A common example is a facial recognition system trained primarily on photographs of lighter-skinned individuals, which will be less accurate for people with darker skin. Personally identifiable information (PII) and other sensitive data should also be masked so that it does not appear in prompts.
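Masking PII before a prompt is sent to a model can be sketched with regular expressions that replace matches with placeholders. The two patterns below are hypothetical and deliberately simple; they will miss many real-world formats (names, addresses, national ID numbers), so production systems typically use a dedicated PII-detection tool.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs far
# broader coverage than email addresses and phone-like digit runs.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("PHONE", re.compile(r"\+?\d[\d\s-]{7,}\d")),
]


def mask_pii(prompt: str) -> str:
    """Replace every match of each PII pattern with a placeholder like [EMAIL]."""
    for label, pattern in PII_PATTERNS:
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


print(mask_pii("Contact jane.doe@example.com or +44 20 7946 0958 about my order."))
# prints: Contact [EMAIL] or [PHONE] about my order.
```

Typed placeholders such as `[EMAIL]` keep the prompt readable for the model while ensuring the raw values never leave the organisation's boundary.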

While designing and developing generative AI use cases, businesses should take a 'responsible-first' approach. It is essential that they carry out a comprehensive and structured responsible-AI assessment and follow a human-in-the-loop approach when making critical inferences. Ineffective or outdated regulatory data or measures used to train the Gen AI model will degrade the recommendations of both the model and every downstream component in its chain.
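A human-in-the-loop policy can be expressed as a routing rule: critical or low-confidence inferences go to a human review queue instead of being acted on automatically. In this sketch the `Inference` fields, the `critical` flag and the 0.9 threshold are hypothetical, and it assumes the model exposes a confidence score, which in practice may be poorly calibrated.

```python
from dataclasses import dataclass


@dataclass
class Inference:
    output: str
    confidence: float  # model-reported score in [0, 1] (assumed available)
    critical: bool     # e.g. affects credit, health or employment decisions


# Hypothetical threshold below which outputs are never auto-approved.
CONFIDENCE_THRESHOLD = 0.9


def route(inference: Inference, review_queue: list) -> str:
    """Send critical or low-confidence inferences to a human reviewer."""
    if inference.critical or inference.confidence < CONFIDENCE_THRESHOLD:
        review_queue.append(inference)
        return "human_review"
    return "auto_approved"
```

Critical decisions are always reviewed regardless of confidence, because a confident model can still be confidently wrong when its training data is outdated.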

Companies and organisations can also assess the usability of GPT models available through open-source platforms such as Hugging Face. Unlike ChatGPT models, these open-source models can learn and improve solely on a curated dataset within the company's or organisation's own infrastructure.

Conclusion:

In the dynamic landscape of user-centric generative AI, ethical and privacy considerations are not secondary concerns but integral components of responsible development. Navigating these concerns requires a proactive approach that combines ethical design, privacy-conscious practices and ongoing collaboration with stakeholders. As we continue to harness the potential of AI, it is imperative to ensure that user-centric generative systems prioritise ethical values and respect user privacy, fostering a future where innovation and responsible AI coexist harmoniously. Such a future, in which technology serves the best interests of humanity, can be realised by adopting an integrated ethical framework for developing and deploying AI.